Table of Contents

As Language Learning Models (LLMs) become increasingly popular in various domains, from chatbots to content generation and voice assistants, it becomes imperative to address potential vulnerabilities that these models may present. One such vulnerability is prompt injection or context injection, a situation where unanticipated user inputs could compromise the function or output of an application. This blog post will delve into how this can affect your LLM applications and offer tips for prevention.

Understanding Prompt Injection

Prompt injection is a form of cybersecurity threat specific to systems that use language learning models (LLMs), such as conversational AI, automated responders, or other types of linguistic interface systems. Similar to SQL injection in databases, prompt injection occurs when an external entity, typically a user, introduces (or “injects”) a malicious prompt or input into the LLM’s interface. This action can manipulate the system into executing unintended commands, divulging sensitive information, or altering the natural flow of operations. The fundamental issue arises from the system’s inability to properly distinguish between legitimate and malicious instructions, often due to inadequate input validation protocols.

Prompt injection within the domain of Language Learning Models (LLMs) poses risks analogous to the perils of SQL injection in the sphere of web applications. In both instances, the system, whether it be an LLM or a web application, inadvertently processes malicious inputs, precipitating unforeseen and often detrimental outcomes. For LLMs, these consequences manifest primarily through the generation of unsuitable or offensive responses, a deviation from the model’s expected behavior, and compromises in the ethical use of the technology. Such repercussions not only undermine the model’s reliability but can also erode user trust and credibility, essential aspects of any user-oriented service.

The severity of prompt injection escalates when these unanticipated inputs alter the fundamental operations of LLMs, potentially leading to data breaches—a nightmare scenario for any organization. In this worst-case scenario, sensitive information may be exposed, violating user privacy, and potentially incurring legal and financial repercussions. Beyond the immediate harm, these breaches can have a long-lasting impact on a company’s reputation and user confidence. Consequently, understanding the parallels between SQL injection and prompt injection in LLMs underscores the necessity for stringent security measures and the continuous evaluation of system interactions with its environment, thereby fortifying its defenses against such insidious threats.

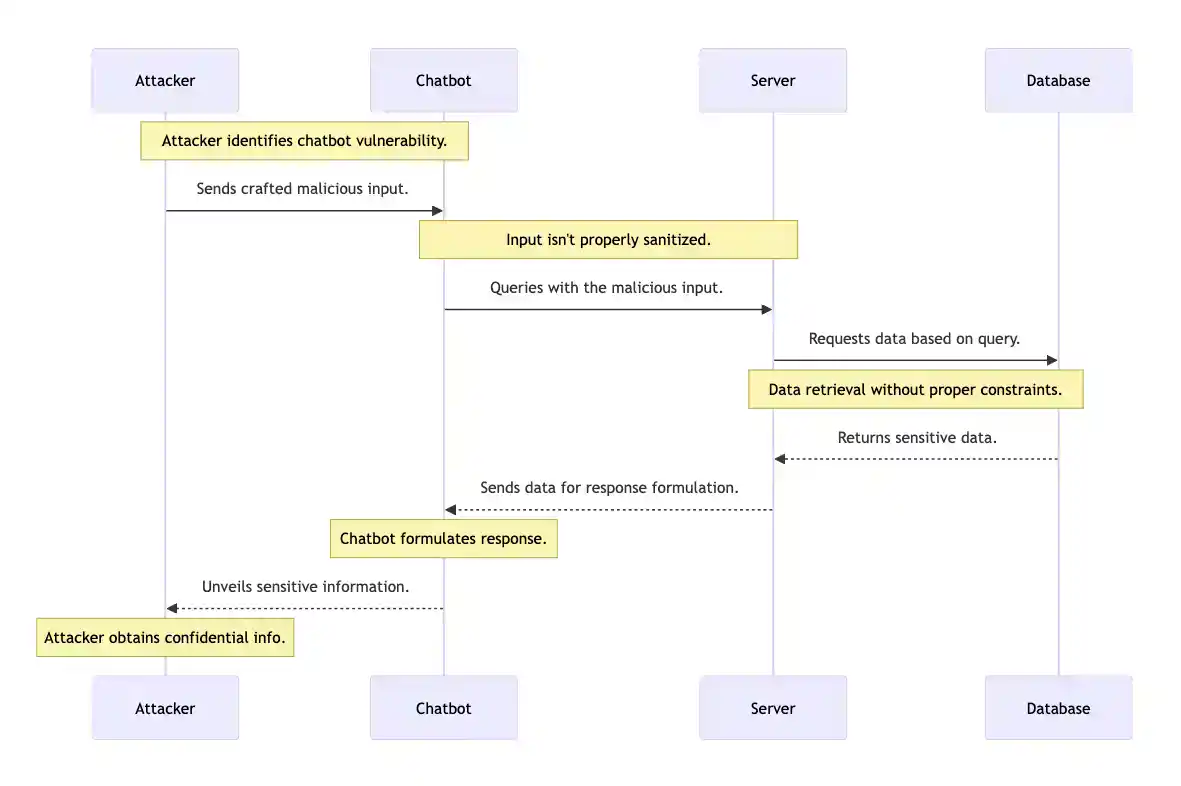

Illustration: The Subtle Perils of Prompt Injection in Action

Imagine this: A bustling company, thriving online, employs a sophisticated chatbot on their website, handling a myriad of customer inquiries around the clock. This digital entity, a product of advanced Language Learning Model (LLM) technology, seamlessly fields common questions, assists with troubleshooting, and even advises on product or service details. It’s a modern convenience, a silent workforce operating in the virtual space the company occupies.

However, a flaw in the system becomes glaringly apparent. An astute attacker identifies a critical vulnerability: the chatbot is susceptible to prompt injection. With malicious intent, they input a deceptive command designed to manipulate the LLM’s processing logic. This unsanitized input compels the chatbot to divulge sensitive data, exposing internal documents, customer details, or other confidential insights. The oversight? A lapse in stringent input sanitization and necessary constraints within the LLM’s operational parameters.

To illustrate this complex interaction, one might envision a diagram, a simplified depiction that lays bare the bones of this digital debacle. This representation, while not exhaustive, highlights the critical junctures at which proper safeguards, had they been implemented, could have averted a breach of this magnitude.

This narrative approach, complete with an evocative illustration, can make the concept of prompt injection more tangible for readers, emphasizing the urgency and significance of robust preventive measures in LLM applications.

The Impact

Prompt injection represents a significant threat in the realm of Language Learning Models (LLMs), particularly as these technologies find widespread use in various sensitive applications. A stark illustration of its potential harm is evident when, for example, a customer service chatbot is subverted, leading it to generate unfitting content or, worse, reveal confidential information. This kind of breach doesn’t just interfere with the intended operational functionality of applications but extends to more profound organizational damage. It can rapidly degrade a company’s carefully built reputation and, importantly, erode the trust that users have placed in the service or the broader business. The ripple effects of such an event could be far-reaching, prompting customer churn or even inviting legal challenges.

The situation escalates further when considering individuals with advanced understanding and skills related to LLMs. If these knowledgeable individuals exploit such vulnerabilities, the resulting issues can extend beyond simple content concerns to technical disruptions. For instance, an orchestrated attack could overload the system, resulting in server crashes, significant slowdowns, or complete unavailability of critical digital services. These types of disruptions not only require costly and time-consuming repair and recovery efforts but also risk a secondary cascade of effects, including loss of business, negative impact on partner relationships, and internal organizational strain.

Addressing these security weaknesses necessitates a multi-faceted approach, acknowledging both the technical and human elements of cybersecurity in LLM applications. It’s not enough to only fortify systems against external breaches. Stakeholders must consider the motivations and means of those who would exploit system vulnerabilities from within or with a deep understanding of the technology. This calls for a combination of robust cybersecurity protocols, ongoing system monitoring, and a culture of security awareness within the organization. By understanding the severity and scope of threats like prompt injection, businesses can more effectively safeguard their systems, data, and user trust in the increasingly digital landscape.

Tips for Prevention

Addressing the potential risks associated with prompt injection requires a comprehensive approach. Here’s how to enhance the security of LLM applications:

Input Sanitization

At its core, input sanitization involves thoroughly examining and filtering the data entered into the LLM. By stripping away or sanitizing strings that could potentially trigger malicious LLM behavior, you reduce the risk of exploitation. This method involves creating a list of ‘allowable’ input characters or types and automatically blocking or modifying anything that doesn’t fit these criteria. It’s not just about removing known harmful inputs, but anticipating new, potentially dangerous inputs that could be manipulated by malicious users.Output Validation

This step goes hand in hand with input sanitization but focuses on the information being produced by the LLM. The process includes setting up protocols that assess the responses from the LLM, ensuring they meet predefined safety and appropriateness standards. By instituting layers of validation checks, you can prevent the system from generating responses that could be deemed inappropriate or sensitive, thereby maintaining the integrity of the interactions and the reputation of the service.Contextual Awareness

Building an LLM with enhanced contextual awareness requires a sophisticated approach to model training. It involves incorporating a diverse range of scenarios and input examples that help the model understand not just the content but the intent behind the inputs. This broader level of understanding enables the LLM to better identify and fend off attempts at manipulation through prompt injection. Advanced techniques in machine learning and natural language processing can be utilized to enable the model to differentiate between typical user interactions and potential threats.Leveraging Experienced LLM Developers

The complexity of safeguarding against prompt injection underscores the need for skilled LLM developers and architects. Professionals in this field bring a wealth of experience and a deep understanding of both the theoretical and practical aspects of LLMs. They are equipped to design systems with built-in safeguards and to anticipate and counteract potential exploitation methods. By staying abreast of the latest in security protocols, threat detection, and machine learning advancements, experienced developers can create resilient LLM applications capable of withstanding prompt injection attempts.

Each of these strategies plays a crucial role in the holistic approach needed to secure LLM applications from the threats posed by prompt injection, ensuring the continuity, reliability, and trustworthiness of services powered by these advanced technologies.

The Role of Experienced Developers and Architects

The looming threat of prompt injection significantly highlights the indispensable role of seasoned LLM application developers and architects in the field of language model technology. These individuals, equipped with extensive knowledge and a profound understanding of the intricacies associated with Language Learning Models, are the first line of defense against the inherent vulnerabilities these systems might face. Their expertise is not just in recognizing potential threats but also in employing preemptive strategies during the foundational stages of application development. It is their insights into the behavioral patterns of LLMs that allow them to navigate the complex landscape of potential security pitfalls effectively.

One of the primary responsibilities of these professionals is to foresee potential security issues that could undermine the application’s integrity. This foresight is crucial in the initial stages of the application’s architectural planning, where strategic measures are integrated to fortify the application against potential breaches. Through methods like input sanitization, they ensure that all incoming data is stripped of any malicious code or intent, preserving the application’s intended function. Additionally, by enhancing the LLM’s ability to discern the broader context in interactions, developers can shield the application from sophisticated attacks that prey on contextual misunderstandings.

Output validation is equally critical, serving as a checkpoint to confirm that the responses generated by the LLM are not only accurate but also free from any content that could be exploited. The philosophy that an experienced developer operates under is the wisdom that prevention is decisively more efficient and less costly than post-incident remedies. Proactive measures in cybersecurity, especially in the delicate field of LLMs, are invaluable. When vulnerabilities are predicted and prevented, organizations avoid not only the financial burdens associated with recovery but also the intangible loss of user trust and brand credibility.

In contrast, reactive approaches, which involve addressing security lapses post-deployment, often culminate in higher costs, ranging from immediate technical fixes to long-term reputational harm. Thus, the foresight and expertise of developers in embedding robust security protocols from the ground up is a critical investment for organizations aiming for resilience in the face of evolving cyber threats. This balanced approach, emphasizing both preventative strategy and robust construction, ensures that LLM applications remain as secure fortresses against the continually shifting landscape of cyber threats.

Conclusion

Understanding and proactively managing vulnerabilities such as prompt injection can significantly enhance the security and functionality of your LLM applications. Prevention is indeed better than cure, especially in the case of LLM-based applications where such threats can have far-reaching implications.

If you are looking to develop your next big LLM-based application, the experts at HyScaler are there to help. Please contact us for more information.